- The New Horizon for Data Rights: New Perspectives on Privacy, Security, and the Public Good

- CLTC Research Exchange, Day 3: Long-Term Security Implications of AI/ML Systems

- AI Race(s) to the Bottom? A Panel Discussion

- New CLTC Report: “Decision Points in AI Governance”

- CLTC Co-Hosts Book Talk on “Human Compatible: AI and the Problem of Control”

- 2019 Annual BCLT/BTLJ Symposium – Governing Machines: Defining and Enforcing Public Policy Values in AI Systems

- Media Round-Up: CLTC in the News

-

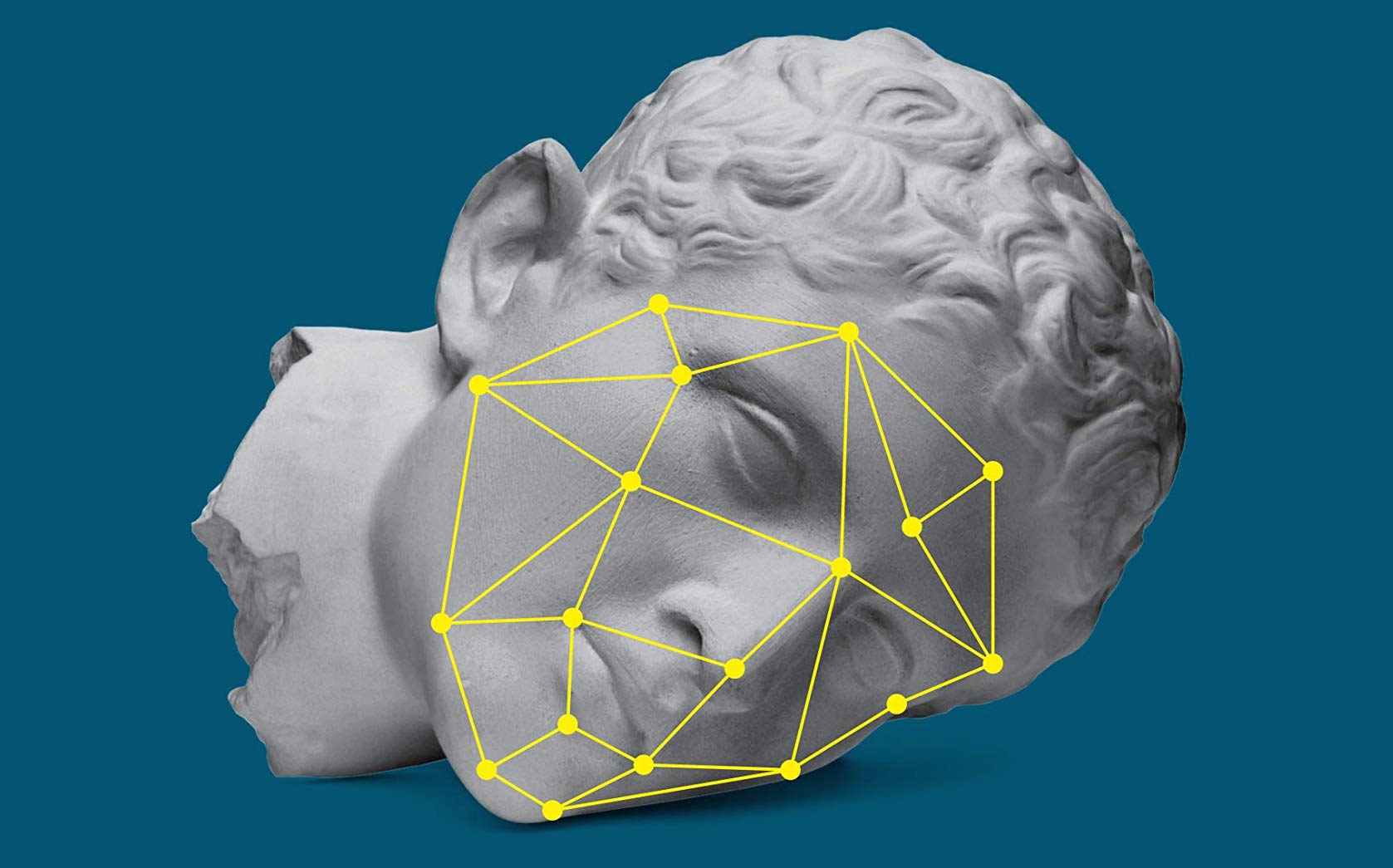

New Report: “Toward AI Security: Global Aspirations for a More Resilient Future”