As threats to digital security and privacy continue to evolve, secure communication has never been more important. Journalists and activists face a constant threat of surveillance, and while privacy experts often recommend messaging apps like Signal or use terms like “zero-knowledge encryption” and “privacy by design,” these terms can be difficult to understand.

To examine the current state of secure communications, the UC Berkeley Cybersecurity Clinic and Center for Long-Term Cybersecurity hosted a panel discussion on February 20, 2025, featuring three professionals whose work centers on promoting online privacy and freedom of information.

The panel featured Martin Shelton, Deputy Director of Digital Security at Freedom of the Press Foundation, an organization that works to protect journalists and their sources; Glenn Sorrentino, Executive Director of Science & Design, Inc., a non-profit that builds tools to enable transparency and free access to information; and Holmes Wilson, co-founder and board member of Fight for the Future, an organization that advocates for internet freedom.

“I thought it would be good to convene people together who are in the industry to have an open conversation about secure communication,” explained Elijah Baucom, founder of Everyday Security and Director of the UC Berkeley Cybersecurity Clinic, a class in the School of Information that trains and partners with UC Berkeley students to support outside organizations that may be vulnerable to surveillance and cyberattacks.

A Baseline of Terminology

Before introducing the panelists, Baucom provided an overview of key terms that are commonly used in the context of secure communication, including “zero-knowledge encryption,” a type of encryption that “ensures that even the creator of the software does not have the keys to decrypt traffic.” He explained that zero-knowledge encryption, which is used by apps like Signal, is more secure than “end-to-end” encryption, which is used by apps like WhatsApp.
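
The idea that a provider "does not have the keys" can be illustrated with a small sketch. This is a toy example under stated assumptions, not how Signal or any real app works: `RelayServer`, `xor_bytes`, and the sample message are hypothetical names, and a one-time pad (XOR with a random key as long as the message) stands in for a real encryption protocol. The point is only that the server stores bytes it cannot read, while the two people who share the key can.

```python
import secrets


def xor_bytes(data: bytes, key: bytes) -> bytes:
    """XOR two equal-length byte strings (a one-time pad, used here as a toy cipher)."""
    if len(data) != len(key):
        raise ValueError("key must be exactly as long as the data")
    return bytes(d ^ k for d, k in zip(data, key))


class RelayServer:
    """Hypothetical provider: stores and forwards opaque bytes and never holds a key."""

    def __init__(self):
        self.mailbox = []

    def deliver(self, ciphertext: bytes) -> None:
        self.mailbox.append(ciphertext)


message = b"meet at noon"
# Assumption: sender and recipient already share this key out of band.
shared_key = secrets.token_bytes(len(message))

server = RelayServer()
server.deliver(xor_bytes(message, shared_key))  # only ciphertext reaches the server

stored = server.mailbox[0]                  # what the provider can see
recovered = xor_bytes(stored, shared_key)   # what the recipient can read
```

Real messaging apps use vetted cryptographic protocols and handle key exchange automatically; the sketch only shows where the key lives.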

Baucom also explained the term “open source software,” i.e., software whose source code is freely available to anyone, allowing users to modify, use, and distribute it under defined license terms. “One of the most beautiful things about open source software is that a lot of times you can host it yourself,” he said. “You can host it for your own community, versus some of the big tech alternatives that you have less control over.”

Other key terms include “privacy by design,” used to describe software designed with privacy as a core pillar, rather than an afterthought; “surveillance capitalism,” used to describe how data is treated as a product and many companies profit from private data; and “decentralized computing,” an architecture in which multiple nodes are spread across different locations, without a single central authority.

Keeping Data Safe from Third Parties

The panel’s first speaker was Martin Shelton, a member of the digital security team at the Freedom of the Press Foundation (FPF) who leads research into the security needs and concerns of journalists, as well as digital security education in schools of journalism (J-schools). Shelton also leads FPF’s security editorial efforts, including the digital security digest newsletter and the U.S. J-school digital security curriculum.

In his talk, Shelton explained that his work centers around supporting journalists and improving their digital security practices to ensure privacy and anonymity. “Who gets visibility into the connections that you’re making in the course of using some of these tools? This is something that we talk to journalists a lot about on our team.”

Shelton explained that FPF offers digital trainings for journalists, as well as resources with explanations on how to navigate different tools. They also offer resources on “navigating the times when we have to make a compromise” because you may need to communicate with “somebody who refuses to get on the platform that you want to be on.”

He stressed that most commonly used communication platforms, including Gmail, direct messages (DMs), or phone calls, are not secure, because third parties — including internet service providers or phone companies — could gain access. “If you are connecting to most service providers, chances are they are going to be able to see the messages that you’re sending,” Shelton said. “We have to understand, what are the different actors in play, and who gets visibility into what?”

Even when outside parties may not have access to the content of communications, they may be able to deduce a significant amount of information from the “metadata,” the information about who is sending what to whom, when, how often, and under what circumstances. “How frequently people are talking can be really useful information to somebody who is trying to suss out what’s happening in a conversation,” Shelton said. “For a lot of the groups who we support, that is enough to put somebody in prison. Just somebody simply having a conversation with a journalist, depending on your role, even if you don’t see what’s in the messages, might be enough to follow up and say, I want to send a subpoena to the service provider.”
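
To make the metadata point concrete, here is a minimal sketch of what can be inferred from records that contain no message content at all. Everything in it is hypothetical: the `records` list, the names `source_a` and `source_b`, and the helper functions are made up for illustration and do not describe any real provider's logs.

```python
from collections import Counter
from datetime import datetime

# Hypothetical metadata records: (sender, recipient, timestamp). No message text.
records = [
    ("source_a", "journalist", datetime(2025, 2, 17, 23, 5)),
    ("source_a", "journalist", datetime(2025, 2, 18, 23, 40)),
    ("source_b", "journalist", datetime(2025, 2, 18, 10, 15)),
    ("source_a", "journalist", datetime(2025, 2, 19, 22, 55)),
]


def contact_counts(rows):
    """Count messages per (sender, recipient) pair."""
    return Counter((sender, recipient) for sender, recipient, _ in rows)


def late_night_senders(rows, start_hour=22):
    """Return senders whose messages were all sent at or after start_hour."""
    hours = {}
    for sender, _, ts in rows:
        hours.setdefault(sender, []).append(ts.hour)
    return sorted(s for s, hs in hours.items() if all(h >= start_hour for h in hs))


counts = contact_counts(records)
most_frequent = counts.most_common(1)[0]  # the pair that talks most often
night_only = late_night_senders(records)  # who only writes late at night
```

Without reading a single message, an observer holding these records would learn that `source_a` contacts the journalist most often and only late at night, which is the kind of pattern Shelton describes.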

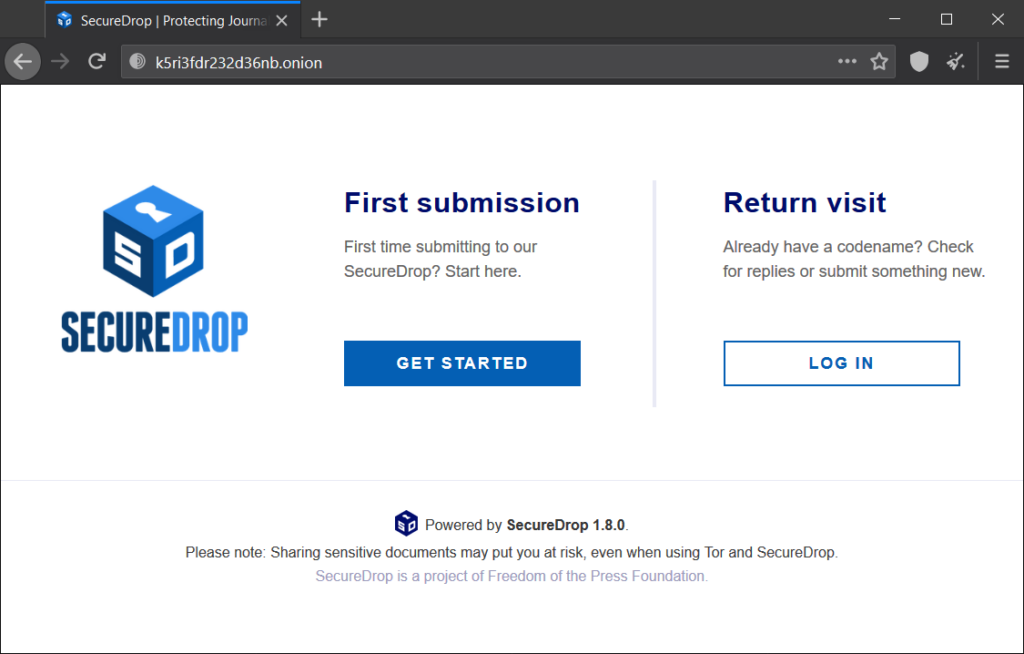

In addition to legal requests to access information, journalists need to be concerned about hackers gaining access to their accounts, which makes the use of apps like Signal all the more important. Other alternatives for sharing information include SecureDrop, a tool specifically designed to share information with media organizations.

“The truth is that there are so many diverse needs in the space, and everybody’s defensive needs are so different,” Shelton said. “We do not want to be married to any one of these tools. We want to better understand who specifically we are building things for, and how we better solve for their particular needs.”

Tools to Protect Whistleblowers

The second speaker on the panel was Glenn Sorrentino, Executive Director of Science & Design, Inc. and a member of the Board of Advisors to Distributed Denial of Secrets. His career has focused on product design, and he has contributed to a range of open-source tools, including Hush Line, Signal, OnionShare, and CalyxOS.

Sorrentino explained that many of the products he has developed are intended to help whistleblowers who seek to share sensitive information about their organizations. “Whistleblower is a dirty word, and part of the challenge for our organizations is, how do we communicate the thing that we’re doing in a safe and responsible way?” he said. “Whistleblowing is not something that comes lightly.”

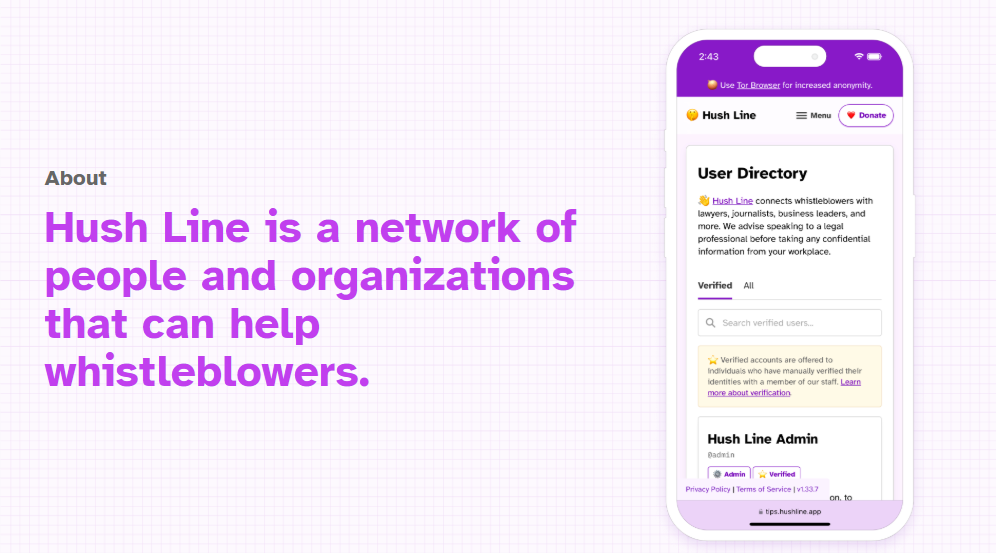

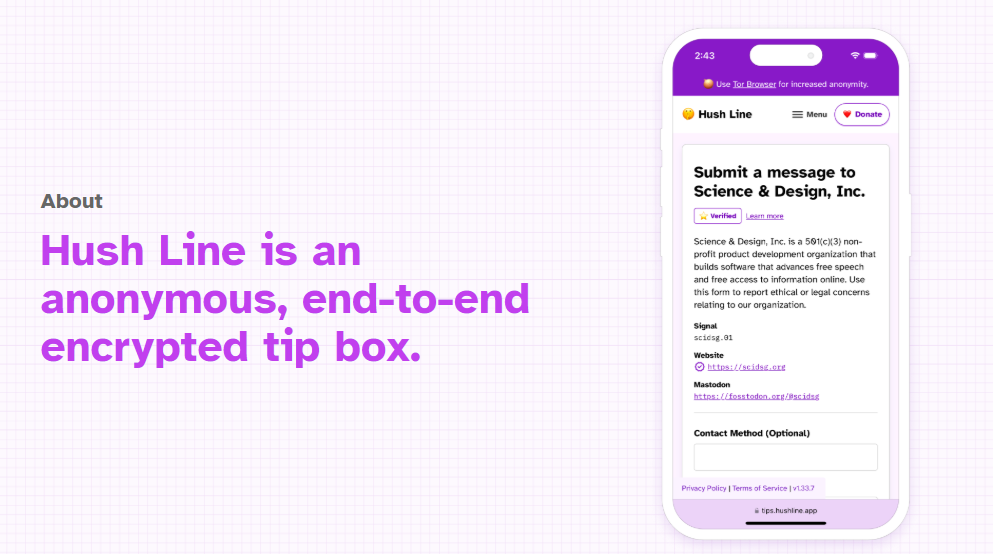

Among the tools available for whistleblowers is Hush Line, a platform that provides individuals and organizations with open-source, end-to-end encrypted, anonymous tip lines; Hush Line is designed to be used by journalists, lawyers, employers, and others. “It’s a generic, encrypted messaging platform that anyone can use, whether you’re a student group and you want an anonymous way for students to reach out to you, or whether you’re a board of directors wanting employees to reach out with ethics or legal concerns. But most importantly, we want to make it easy for a whistleblower who’s already in a very stressful position,” Sorrentino said.

“We’re trying to remove as many roadblocks or barriers as possible for the people that need help, including downloading software or creating an account,” Sorrentino said. “We don’t require tip line owners to supply us with any PII. We offer end-to-end encryption. We’re all open source, so if anyone wants to come and verify our code, you can. You can run it locally.”

Gaps Remaining in Secure Communication — and Lessons Learned from Past Victories

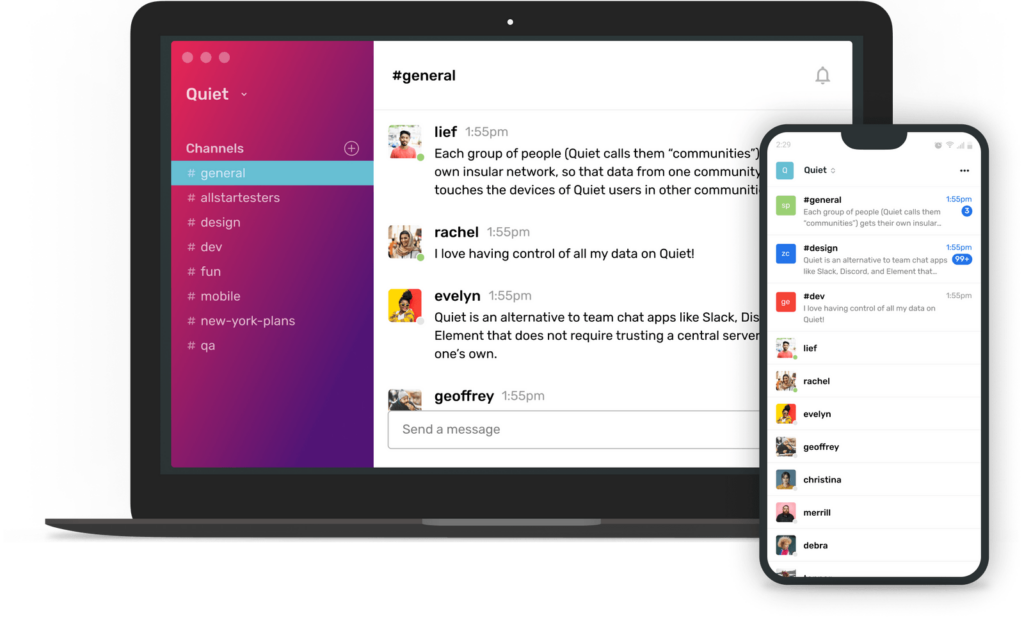

The final speaker at the panel was Holmes Wilson, an internet freedom activist whose work mixes mass mobilization and software tools. He is a co-founder and board member of Fight for the Future, the activism organization that was instrumental in defeating the infamous US site-blocking laws SOPA/PIPA, fighting for net neutrality rules in the US and Europe, opposing law enforcement crypto backdoors, and more recently challenging the use of face recognition tech by US law enforcement and products like Amazon Ring. He also previously co-founded Miro, a free software video player based on BitTorrent and RSS, and was a campaign manager at the Free Software Foundation. He is currently part of a team developing Quiet, a “Slackier Signal” for teams that need the security of Signal with the team features of Slack.

Wilson acknowledged that the state of secure communication is “vastly better than it was 20 years ago,” noting that the past two decades have ushered in “almost universal access to a lot of things: end-to-end encrypted messaging, transit layer encryption for web and email, disappearing messages, and full disk encryption that’s on by default…. All these things were huge victories.”

But he added that “there’s still a ton more to do,” and he highlighted some of the remaining gaps.

“The first big gap here is that devices are way too hackable,” Wilson said. “When governments realized they couldn’t just snoop on stuff at the ISP layer and listen to people’s messages, they were like, ‘What if we just hack somebody’s phone? Then we can get all their messages. Or we can run code on their phone, and we can turn a microphone on and just listen to them talking in the room.’”

He explained that after some governments began hacking into people’s phones, “companies started selling this as a service to all governments, and now, in some ways, journalists and other high-profile users are more vulnerable than they were before…. So we need to make devices much harder to hack.”

A second gap, Wilson explained, is that because of metadata, “privacy guarantees are super hard…. If two people are communicating over the internet and someone can see internet traffic, it’s too easy for them to correlate who’s communicating with whom. We only have very costly ways of guaranteeing to people that who they’re communicating with is private.”

As a final gap, Wilson noted that “almost all these secure tools really fall down in situations where someone can force you with the threat of violence or other consequences to open up your phone. And that’s what we see happening a lot in authoritarian contexts, like Myanmar or in situations such as people facing an abusive domestic partner. Someone can just look in your phone. It sounds like it’s impossible to protect people’s security in that case, but actually, there are cool things you can do, and that’s a really important area.”

Wilson also detailed some “lessons we can get from past wins to think about planning future wins.”

“One huge lesson is that secure tools can compete in the marketplace, and secure features can compete in the marketplace with big tech,” he said. “People can come out with stuff that actually does reach billions of people. These technical solutions to theoretical problems can actually scale and have global impact.”

He also added a note encouraging students to explore finding solutions to emerging privacy challenges. “A solution might seem really theoretical…, but actually, if you do the engineering work, you can make it happen. In 2005, no one thought it was practical to get everybody using end-to-end encryption… but the Signal folks set to work and proved that it could happen. Right now, it seems like it’s a pipe dream to make our phones unhackable to governments, but there is actually a theoretical path there, and there is engineering work that could be done to make that possible.”