Investors, shareholders, and corporate boards who engage in AI oversight will gain earlier visibility into systemic exposures, sharpen their ability to price emerging risks, and set the standards for the AI era.

AI investment is booming. Global spending on artificial intelligence (AI) is projected to reach $1.5 trillion in 2025,[1] signaling accelerated integration of AI into the products, services, and workflows of enterprises worldwide. AI is also dominating venture capital (VC) investment. In 2024, global VC investment rose to $368.3 billion across more than 35,000 deals as investor interest in AI soared,[2] and U.S.-based VCs represented over $200 billion of this total.[3] In the first half of 2025, AI startups received 64% of all VC dollars invested in the U.S.[4]

The volume and pace of capital formation signal a continuing surge in AI development and deployment. However, while widespread AI adoption has created new opportunities and generated considerable value for firms, it is not without risks, including those related to cybersecurity and business continuity. These risks require oversight from investors, intermediaries, and corporate boards alike.

For investors and corporate boards, engaging in oversight is particularly beneficial. It enables companies to capture efficiency gains, expand the number of viable AI use cases, and strengthen the performance of AI models, ultimately increasing both the value companies derive from AI and the overall value of AI-driven companies.

A collaborative research initiative

Since March 2025, we have been engaged in research on AI risk oversight for investors and corporate boards. Investor capital acts as a catalyst for the development and deployment of new AI systems across the economy. Corporate boards influence AI supply chains, model and infrastructure design, data and compute sourcing, and integration of AI into existing processes and operations. Together, these actors shape much of the risk environment, making them critical nodes in the governance of AI across the economy.

We have had conversations with investors of various types, including VCs (both traditional and corporate), sovereign wealth funds, and private equity firms. We have engaged with regulators across jurisdictions, at both the national and subnational levels. We have spoken extensively with AI developers and their boards, as well as with AI security researchers and practitioners.

These conversations form the basis for the project summarized in this blog post, which asks: how can investors establish governance processes that support AI safety, whether in the context of business continuity, misuse prevention, or system verification, both within their own workflows and in the operations of their portfolio companies? Our goal is to provide practical resources to help investors and boards establish oversight mechanisms that enhance the value of AI uses and improve the security outcomes of deployed systems.

To ensure our research integrates a wide array of perspectives, the UC Berkeley Center for Long-Term Cybersecurity will host a virtual roundtable on this topic on December 8, 2025. We welcome requests for participation from investors and intermediaries, AI developers and their industry partners, corporate board members of companies deploying AI, global regulators and policymakers, AI security researchers, and members of the media. This event will take place under the Chatham House Rule. To request an invitation, please contact cltcevents@berkeley.edu or oumoubly@berkeley.edu.

Why investors? Why boards? Why this impact space?

Investors play a key role in driving the widespread adoption and deployment of AI, but they often struggle to identify the full range of AI use cases within their operations and across their portfolios. This limited visibility is a significant barrier to creating risk oversight processes, but it also offers investors an opportunity to help firms better understand the risks and challenges associated with AI.

Similarly, companies increasingly use AI to replace existing technology and manual processes, but often need tools to inventory AI use cases, monitor AI integration into workflows, measure efficiency gains, and understand the risk potential of deployed systems. Engaging in oversight provides mechanisms for these activities.
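As a rough illustration of what an AI use-case inventory could look like in practice, the Python sketch below records a few use cases and rolls them up for a board report. Everything in it is an assumption made for illustration: the `AIUseCase` fields, the three-level risk scale, the `summarize` helper, and the sample entries are hypothetical and do not come from the research described here.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class AIUseCase:
    """One row in a hypothetical AI use-case inventory."""
    name: str
    business_unit: str
    replaces: str                  # technology or manual process displaced
    hours_saved_per_month: float   # self-reported efficiency gain
    risk_level: str                # "low", "medium", or "high" (assumed scale)


def summarize(inventory):
    """Return total hours saved and the number of use cases per risk level."""
    total_hours = sum(u.hours_saved_per_month for u in inventory)
    by_risk = Counter(u.risk_level for u in inventory)
    return total_hours, dict(by_risk)


# Made-up sample entries, for illustration only.
inventory = [
    AIUseCase("invoice triage", "finance", "manual review", 120.0, "low"),
    AIUseCase("support chatbot", "customer service", "scripted IVR", 300.0, "medium"),
    AIUseCase("credit scoring model", "lending", "rules engine", 80.0, "high"),
]

total_hours, by_risk = summarize(inventory)
print(f"Hours saved per month: {total_hours}")
print(f"Use cases by risk level: {by_risk}")
```

Even a simple structure like this gives boards and investors a shared starting point: the same records that track efficiency gains also show where higher-risk deployments are concentrated.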

Enhancing visibility into AI risk

To build toward more robust visibility into AI risk, investors need quantitative signals about how AI systems behave in the wild, and they need to be able to rely on their intermediaries, including fund managers and corporate boards, to collect this information.

Consider that in investment processes, asset managers and their intermediaries already perform due diligence on target companies. This often includes collecting information related to financial performance, operational continuity and controls, regulatory compliance, supply chains, and more. Through this process, investors shape company boards according to their mandates, including by holding board seats, helping set governance priorities, influencing and overseeing critical growth and operational plans, and engaging in stewardship.

The investment process includes several actors, starting with the limited partners (LPs) who provide capital to investors, the fund and asset managers who provide funds to firms, the boards of those firms, and the regulators who oversee the activities of each actor. Each of these stakeholders contributes to the AI value chain and plays a role in influencing how companies assess and engage with risk.

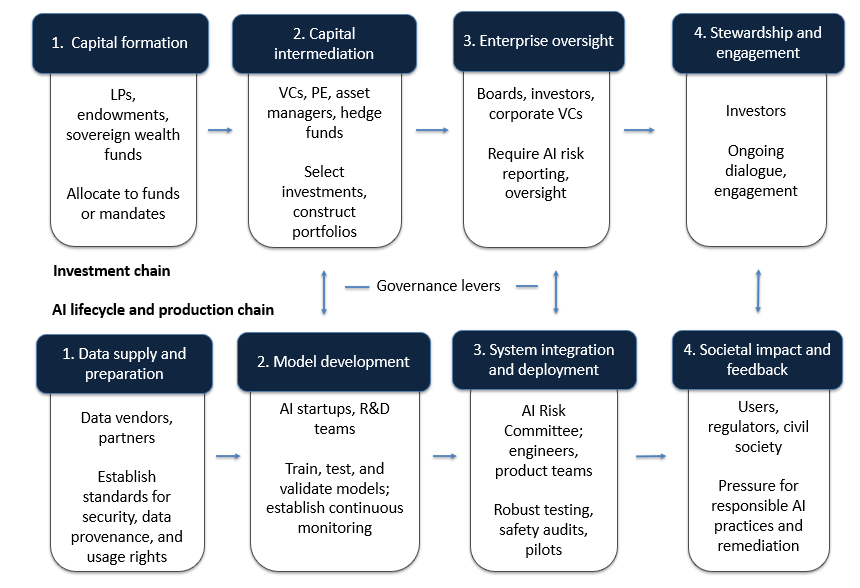

The investment process also catalyzes an AI product chain, which begins with investment and culminates in deployment. The figure below illustrates the investment process and AI product chain unfolding in tandem. It highlights opportunities for investor and board oversight, and includes mechanisms both groups can use to collect information about model behavior and performance over time.

Fig. 1: Mechanisms for risk oversight across the AI value chain

Importantly, product and value chains are recursive: investor actions shape funds’ and firms’ behavior, but feedback from technical incidents, regulatory requirements, and public opinion continuously influences the risk environment and investor expectations. This creates a feedback loop: investors gain deeper visibility into AI-related risks through oversight and are better equipped to refine disclosure requirements and improve monitoring practices over time.

For example, consider a corporate investor with a mandate to fund autonomous vehicle technologies. Its venture arm might require portfolio companies to train computer vision and sensor-fusion models using sensor data sourced from vendors that adhere to certain standards for provenance and data handling. This expectation both shifts risk away from the investor and signals to the broader market that secure data pipelines are requisite for accessing capital. Over time, these kinds of requirements cascade through the value chain and shape norms for safety and transparency.

These requirements also influence how stakeholders across the economy engage with risk, including whether they ultimately accept and respond to it, shift or reduce it, or avoid it altogether.[5]

Roles and responsibilities

While investors must understand and manage AI risk, effective oversight depends on clear security benchmarks across risk domains. Benchmarks make risk measurable and are the starting point for the flow of quantitative information about model behavior that ultimately reaches corporate boards and investors. Boards and industry developers need to collaborate to set standards across risk domains, which investors can then use to govern risk more effectively across their portfolios.

In this way, investors and boards play interdependent roles in advancing responsible AI governance. When they work together, they put strong risk controls in place at the top of the value chain and position the rest of the chain to maximize the resulting upside.

A platform for dialogue

This project aims to serve as a convening platform for the stakeholder groups tackling these challenges, including investors, shareholders, boards, AI security experts, and regulators. The December 8 roundtable will bring together a cross-sector group to examine the following core questions:

- How are corporate boards and investors currently defining “AI risk”?

- How are existing oversight, diligence, and risk management processes being used to build a picture of AI risk?

- How do existing risk prevention processes accommodate novel AI risks?

- What new safety and security standards does industry need to adopt to fill gaps in the existing risk oversight processes?

- How can those processes be extended and strengthened?

This project will culminate in a report, to be published in 2026, that provides investors and boards with frameworks for stronger governance. The December 8 convening will help define the priorities that shape that report.

Ultimately, the way we govern AI today will determine the stability of markets and the integrity of the systems that sustain our economies and societies. This work is one step toward getting that future right.

Notes

1. “Gartner Says Worldwide AI Spending Will Total $1.5 Trillion in 2025.” Gartner, September 17, 2025. https://www.gartner.com/en/newsroom/press-releases/2025-09-17-gartner-says-worldwide-ai-spending-will-total-1-point-5-trillion-in-2025.

2. “2024 Global VC Investment Rises to $368 Billion as Investor Interest in AI Soars, While IPO Optimism Grows for 2025 according to KPMG Private Enterprise’s Venture Pulse.” KPMG, 2024. https://kpmg.com/xx/en/media/press-releases/2025/01/2024-global-vc-investment-rises-to-368-billion-dollars.html.

3. Ibid.

4. Primack, Dan. “AI Is Eating Venture Capital, or at Least Its Dollars.” Axios, July 3, 2025. https://www.axios.com/2025/07/03/ai-startups-vc-investments.

5. “Risk Response – Glossary.” NIST Computer Security Resource Center (CSRC), n.d. https://csrc.nist.gov/glossary/term/risk_response.

About the Author

Oumou Ly is a Nonresident Research Fellow with the AI Security Initiative at UC Berkeley’s Center for Long-Term Cybersecurity, where she conducts applied research on the systemic risks that emerging technologies pose to global financial systems. From 2023 to January 2025, Oumou served as Senior Advisor for Technology and Ecosystem Security at the White House, where she was an author of the 2023 Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence and was instrumental in the development and implementation of the National Cyber Workforce and Education Strategy.

Previously, Oumou served as an advisor at the U.S. Cybersecurity and Infrastructure Security Agency and in the U.S. Senate. She has also conducted research at the Berkman Klein Center for Internet & Society at Harvard University.