- This event has passed.

A recap and recording of this event can be viewed here.

---

How are AI language models being used, and what kinds of harms are people experiencing? How can publication norms for AI language models help mitigate potential harms? How can we incentivize responsible AI research and development?

The recent trend toward building increasingly large language models, capable of writing and speaking in ways that appear shockingly human, comes at a cost. There is growing urgency within the AI community to better address these issues, including by improving the documentation of risks and the monitoring of public uses.

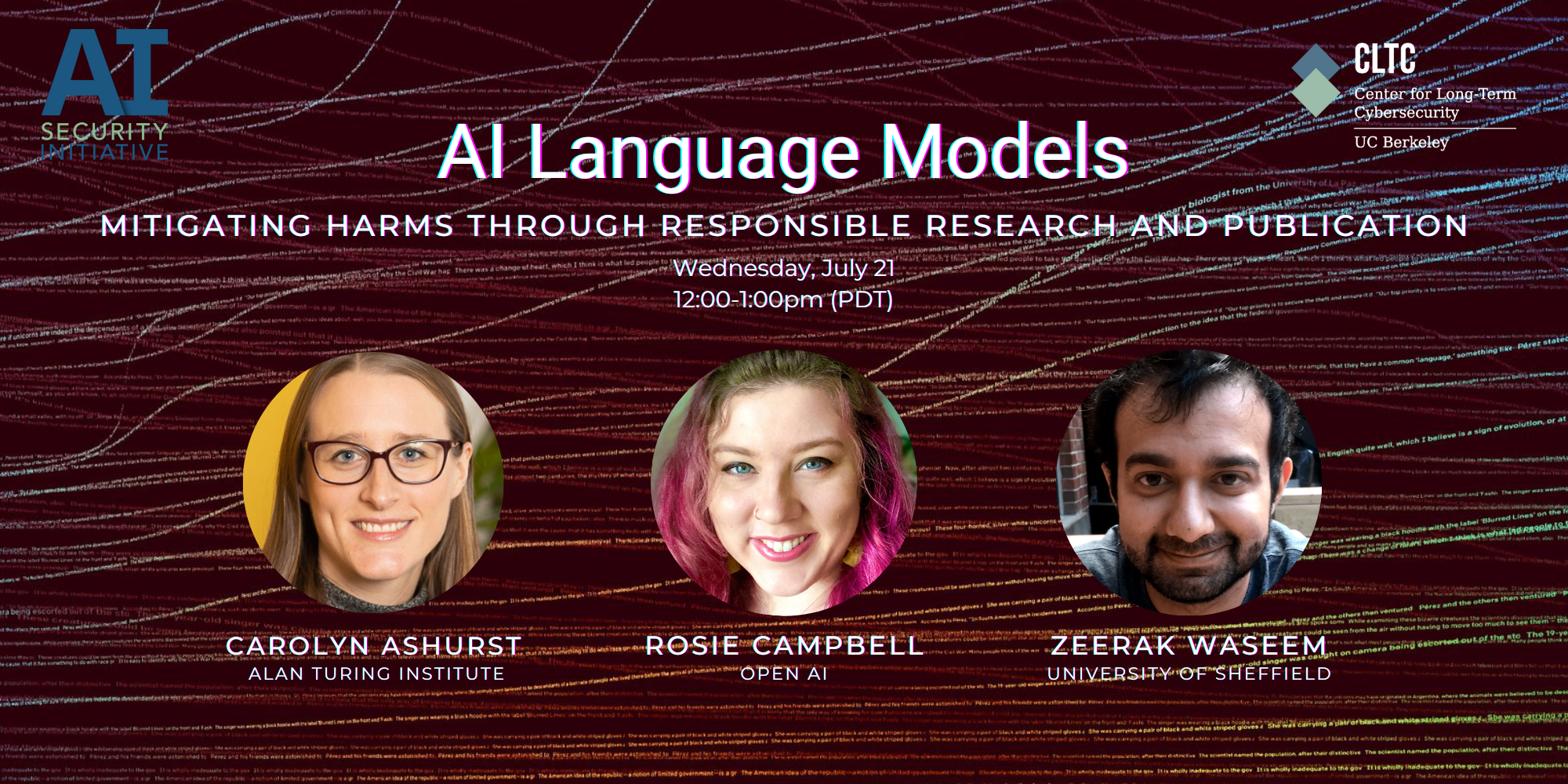

Join the AI Security Initiative at the UC Berkeley Center for Long-Term Cybersecurity on Wednesday, July 21 from 12:00-1:00pm (PT) for a discussion of these issues. Distinguished speakers Carolyn Ashurst, Senior Research Associate in Safe and Ethical AI at the Alan Turing Institute; Rosie Campbell, Technical Program Manager at OpenAI; and Zeerak Waseem, PhD candidate at the University of Sheffield, will share their perspectives from the front lines of AI research and development.