Language models based on artificial intelligence have advanced significantly in recent years and can now write and speak in ways that appear shockingly human. Yet for all their potential benefits, these technologies carry myriad costs, and there is growing urgency within the AI community to address them, including by improving the documentation of risks and the monitoring of public uses.

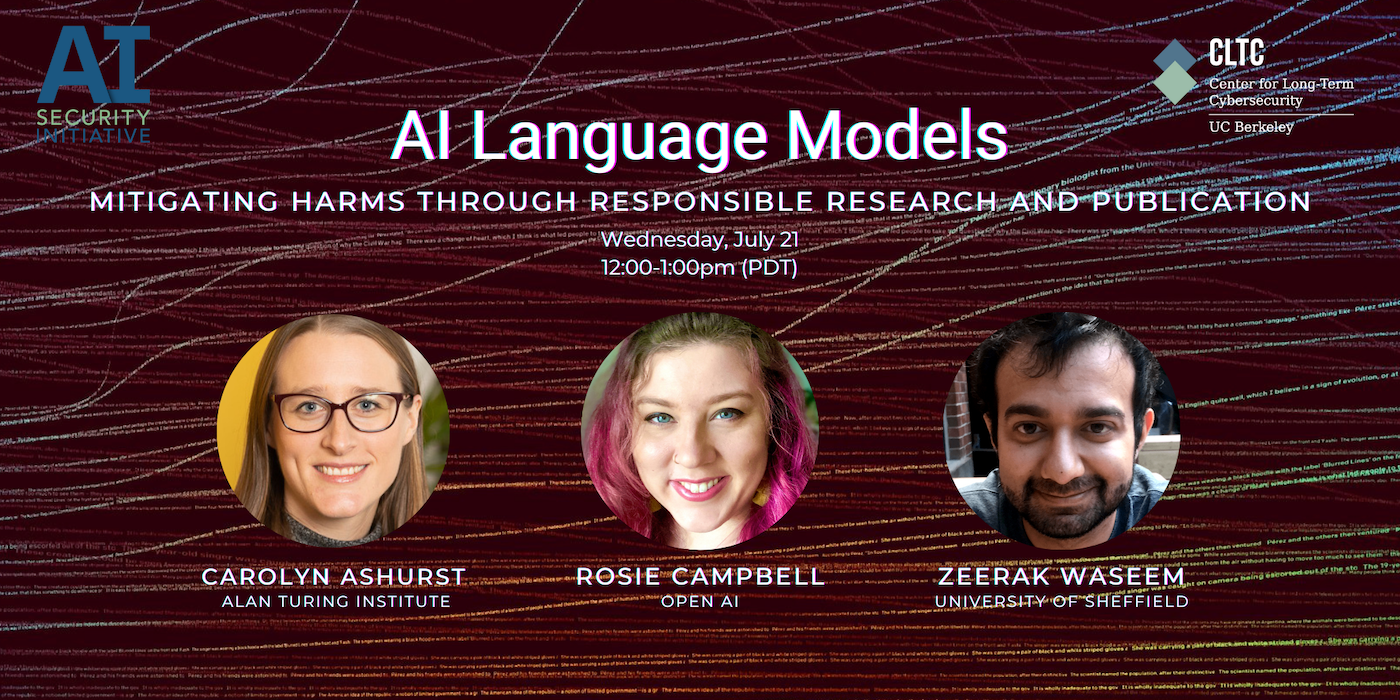

On July 21, 2021, the AI Security Initiative (AISI) presented a panel discussion featuring a group of scholars working on the front lines of AI research and development. The panel included Carolyn Ashurst, Senior Research Associate in Safe and Ethical AI at the Alan Turing Institute; Rosie Campbell, Technical Program Manager at OpenAI; and Zeerak Waseem, PhD candidate at the University of Sheffield.

“AI language models offer something we have dreamed about for decades, machines that can communicate in ways that appear almost indistinguishable from humans,” explained Jessica Newman, Program Director for the AISI, who moderated the panel. “The possibilities of this technology are incredible, but there are real limitations. And the trend toward ever-larger models also comes with cost to the environment and to society. We are seeing how this technology can perpetuate inequities, and contribute to the spread of disinformation and extremism…. The discussion today will delve into the risks of AI language models, risks that people are experiencing today or could face in coming years, and on several efforts to mitigate these risks, including improving documentation and the monitoring of uses.”

Each panelist gave a presentation, followed by an open discussion and question-and-answer session. In his presentation, Zeerak Waseem discussed some of the challenges related to AI language models by focusing on the context of hate speech detection. “It is a microcosm because machine-learning content moderation functions as a third party,” Waseem explained, adding that hate speech detection systems “adjudicate over what should be transmitted from the speaker to the listener, and what is acceptable.”

AI-based content moderation can be problematic, Waseem said, because “words are unreliable signals for whether something is abusive or not, or even for what the communicative context is. If I jokingly tweet to a Twitter friend for them to f*** off, versus telling a racist to f*** off, everything might look exactly the same [to an AI hate speech moderation model].”

“What happens with language modeling is that we take a disembodied perspective and we end up taking a hegemonic view on things, and this also includes values,” Waseem said. “Language models are akin to Mary Shelley’s monster. They assume a distributive logic, that we can remove something from its context and stitch it together with something else. And then we iterate over these disembodied data, as if meaning hasn’t been methodically stripped away. And this ignores questions of where the data come from, who the speakers are, and which communicative norms are acceptable to encode. What we end up with is our models that speak or act with no responsibility or intent.”

In her presentation, Rosie Campbell provided an overview of findings from a report, Managing the Risks of AI Research: Six Recommendations for Responsible Publication, that she co-authored while working with Partnership on AI. “The question that I have been focusing on is, given AI’s potential for misuse, how can AI research be disseminated responsibly?” Campbell said. “In terms of large language models, we might see a real increase in autogenerated disinformation, degrading of the information ecosystem, and also people trying to generate information in high-stakes domains like medicine, which could be problematic.”

Campbell provided a summary of the key recommendations from the report, which include encouraging researchers, research leaders, and academic publications to be more transparent in acknowledging potential downstream harms of AI-related work. “This is really about trying to increase transparency and increase the normalization of thinking about the potential negative consequences of work,” Campbell said. “This is about trying to spot things as early as possible, and then be able to put in place mitigations, and also to not penalize people who are drawing attention to these issues.”

In her talk, Carolyn Ashurst spoke about the potential use of “reputational incentives” and other means of regulating the use of AI language models. “How can we incentivize responsible research and deployment?” Ashurst asked. “Firstly, through external governance, like regulation and independent audits, and through formal self-governance mechanisms from within the community, for example, with peer review, and funding decisions through resourcing decisions, particularly for government and academic spending. And finally, through changing the research environment — the research objectives and expectations about the role and responsibilities of researchers.”

Ashurst also suggested the use of oversight bodies similar to academic institutional review boards (IRBs), which are “used before research commences to check the human subjects will be treated ethically.” She noted that Stanford recently implemented an “Ethics and Society Review Board” for those applying for funding from the Stanford Institute for Human-Centered AI. “This new review process is concerned with wider societal impacts and downstream consequences,” Ashurst explained.