Policymakers, technologists, and academics gathered at UC Berkeley on April 22 for the 2025 AI Policy Research Symposium, spotlighting the university’s leadership in shaping the future of responsible artificial intelligence. Hosted by the AI Policy Hub, the event brought together researchers driving the next wave of AI policy solutions at a time when governance frameworks are struggling to keep pace with innovation.

The symposium featured a keynote by Gideon Lichfield, UC Berkeley Tech Policy Fellow and former Wired editor-in-chief, who reflected on the evolving landscape of tech journalism and the growing responsibility of policymakers and innovators to align AI systems with public values.

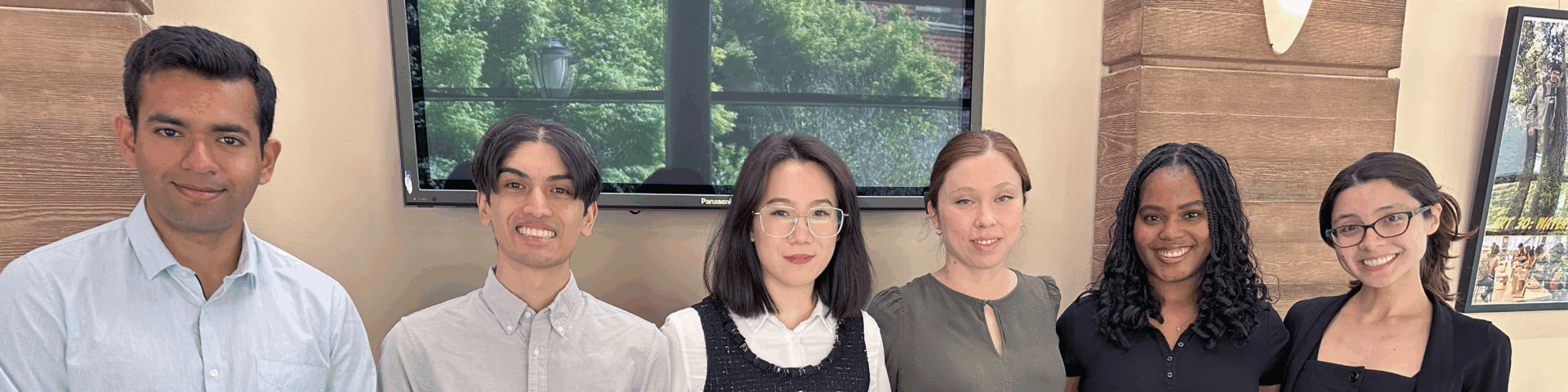

The symposium highlighted the work of the 2024–2025 AI Policy Hub fellows, who presented research on various aspects of AI policy and ethics:

- Syomantak Chaudhuri from Electrical Engineering and Computer Sciences (EECS) shared insights into how differential privacy can empower end-users to set their own privacy thresholds without compromising model reliability.

- Jaiden Fairoze from EECS discussed cryptographic methods for verifying the provenance of AI systems.

- Mengyu Han from the Goldman School of Public Policy explored effective strategies for sourcing AI incidents to inform evidence-based AI governance.

- Audrey Mitchell from the School of Law examined safeguards for AI use during legal proceedings.

- Ezinne Nwankwo from EECS provided guidance for the responsible use of data-driven tools in social service provision.

- Laura Pathak from the School of Social Welfare shared effective strategies for meaningful stakeholder engagement in AI development and use.

The event marked the culmination of the AI Policy Hub fellowship, an interdisciplinary initiative training UC Berkeley researchers to develop effective governance and policy frameworks to guide artificial intelligence, today and into the future. The initiative is housed at the AI Security Initiative, part of the University of California, Berkeley’s Center for Long-Term Cybersecurity, and the University of California’s CITRIS Policy Lab, part of the Center for Information Technology Research in the Interest of Society and the Banatao Institute (CITRIS).

As AI continues to transform nearly every sector of society, the research presented offered a hopeful roadmap for navigating that complexity, with transparency, accountability, and equity at the forefront.