On March 31, 2022, CLTC convened a panel discussion focused on the use of “data scraping” — using software to extract publicly available data from a website or platform — for research purposes. Among the questions considered: to what extent should researchers be allowed to scrape data from platforms on which users post data that is left open to the public? What governance mechanisms could help protect researchers and privacy? And how might data scraping be handled differently in different contexts?

This panel was the second in a series of public conversations hosted by CLTC exploring the legal, technical, and policy questions and solutions that emerge when data is scraped at scale. (See this page for a video and recap of the first panel in the series, “Data Scraping and the Courts,” which addressed a range of legal questions related to data scraping.)

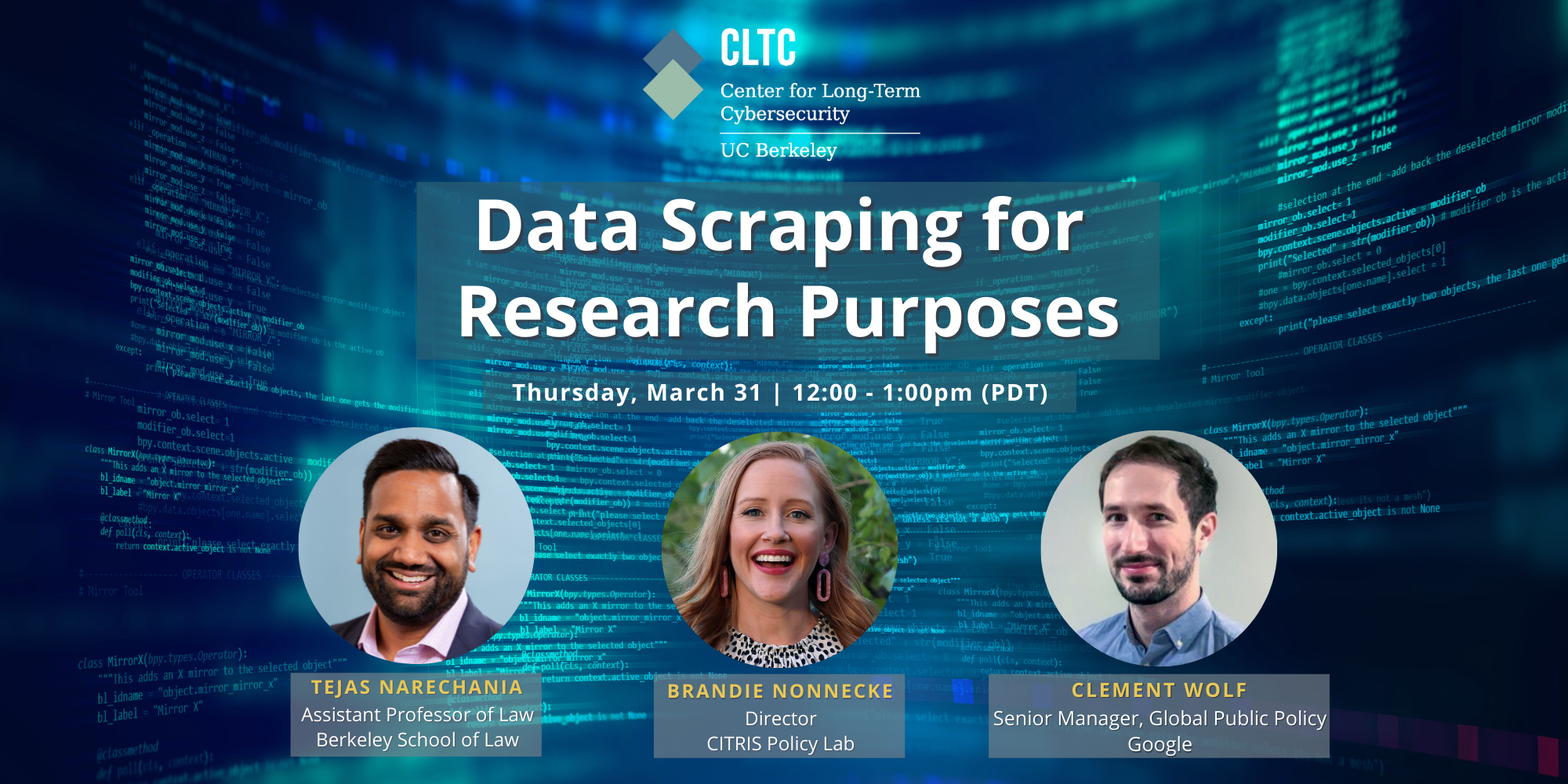

The research-focused panel included Brandie Nonnecke, Founding Director of the CITRIS Policy Lab, and Clement Wolf, Head of Information Quality Policy, Government Affairs and Public Policy for Google. The conversation was moderated by Tejas Narechania, the Robert and Nanci Corson Assistant Professor of Law at the UC Berkeley School of Law and Faculty Co-Director of the Berkeley Center for Law & Technology.

“Events like these are part of CLTC’s mission to focus on the future of digital security, and expand who participates in cybersecurity,” said Ann Cleaveland, CLTC’s Executive Director, in introductory remarks. “Today’s discussion is the second in a series on different facets of data scraping, as technological evolution forces us to rethink age-old questions such as what is public and what is private.”

Wolf explained that his work at Google focuses on improving the quality of information shared online, with the goal of minimizing the spread of mis- and disinformation. “The role of platforms like ours is to do the best we can to elevate high-quality information, and reduce the spread of the harmful disinformation,” Wolf said. “We’re not equipped, nor is it our responsibility, to help society answer these important questions in a way that is independent and trusted. That’s why we have researchers. That’s their role. Even though there is a lot of research published about disinformation online every single day, researchers understandably complain that these questions are really hard to answer without some sort of access to data that is currently proprietary, or that is protected by Terms of Service. That’s where these questions [about data scraping] really come to the fore.”

Nonnecke explained that the CITRIS Policy Lab, too, conducts empirical research on how misinformation spreads on online platforms, especially through automated accounts such as bots. “As a researcher, we want more information,” she said. “We want to know, who’s behind these accounts? How is the recommender system prioritizing some Tweets and content over others? It’s clear that platforms, policymakers, and the public see that there’s a significant challenge on how to effectively identify and mitigate the spread of harmful content. We realize that this is a problem. And we need more data and more research.”

Nonnecke emphasized that platforms should be more open to researchers who want to access their data, particularly if they want to avoid cumbersome regulations. “The last thing platforms want is… to be beholden to comply with legislation that may be overly burdensome or completely misses the mark,” Nonnecke said. “I hope that platforms are supportive of wanting to open up a little bit more for there to be this independent research so that we can know more of what’s happening.”

In response, Wolf explained the reasoning behind companies’ decisions not to allow data scraping. “Let’s consider the question, why not let just anyone scrape content that’s publicly available on services? Why is that a problem?” Wolf said. “There are at least two risks that are worth bearing in mind when we talk about that. One is, if I’m a malicious actor, and what I really want is to game or circumvent or exploit the platform’s ranking systems so as to elevate information to users… one of the things I might do is try to scrape the platform so as to better reverse engineer its ranking systems and figure out where there might be gaps. So that’s an integrity question.”

Another risk, Wolf noted, is that people may post data without realizing that it could be “scraped” and reused for other purposes, which raises privacy concerns. “The ability for anyone to just vacuum and reprocess data, then use it for different purposes, causes serious questions from privacy and ethical standpoints,” Wolf said. “It makes sense that there should be some sort of barriers to anyone just picking up any data they want from any platform. And that’s why we have these scraping protections.”

“I am sympathetic to platforms that they are facing real challenges around data privacy, security, and sharing proprietary information,” Nonnecke said. “But I think that we need to be cautious that they are not using that as a shield to protect themselves against transparency and necessary accountability. There is an incentive for platforms to open up a little bit more so that we have well-scoped and effective legislation and regulation.”