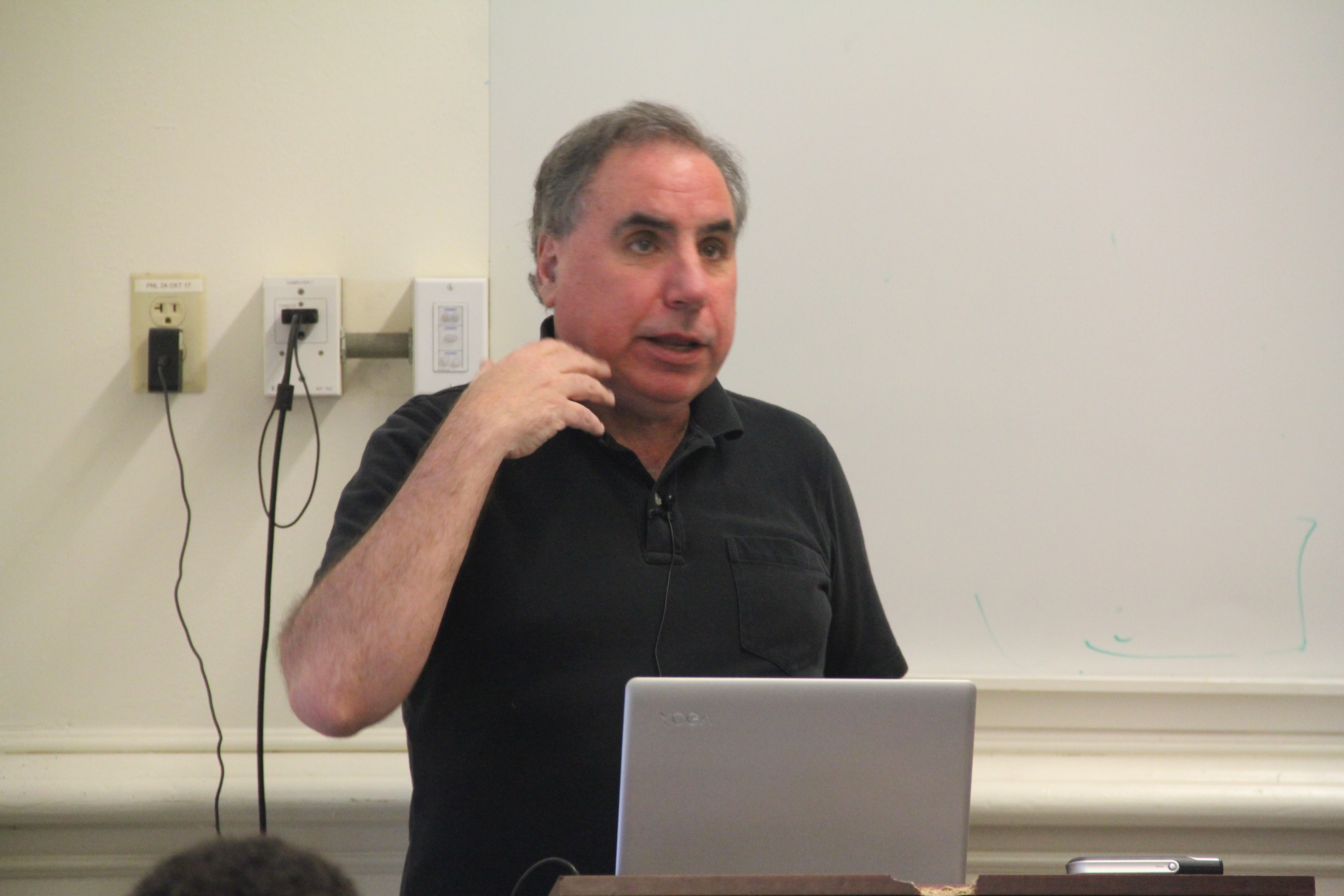

On April 26, the Center for Long-Term Cybersecurity was honored to host a presentation by Doug Tygar, Professor of Computer Science and Professor of Information Management at UC Berkeley, for the third and final event in our 2018 Spring Seminar Series.

Tygar works in the areas of computer security, privacy, and electronic commerce. His current research includes privacy, security issues in sensor webs, digital rights management, and usable computer security. His awards include a National Science Foundation Presidential Young Investigator Award, an Okawa Foundation Fellowship, a teaching award from Carnegie Mellon, and invited keynote addresses at PODC, PODS, VLDB, and many other conferences. He has written three books; his book Secure Broadcast Communication in Wired and Wireless Networks (with Adrian Perrig) is a standard reference and has been translated into Japanese.

In recent years, Tygar and his colleagues have been developing new machine learning algorithms that are robust against adversarial input. In his presentation, “Adversarial Machine Learning,” Tygar provided an overview of some of the major security challenges that developers of machine-learning algorithms must contend with. “With machine learning, the situation that we have is one where we have very good algorithms that can deal with attacks in the presence of noise,” Tygar said. “If you get noisy data coming in, your sensor isn’t quite right, or there are errors in a training set…when an adversary is allowed to shape that sort of data, we face a much more serious problem, and the algorithms we use today prove very brittle.”

Tygar noted that machine learning will be a powerful technology for computer security on the Internet only if the systems are trained properly to detect a wide range of attacks. For example, while standard machine learning algorithms are robust against input errors drawn from random distributions, they turn out to be vulnerable to errors strategically chosen by an adversary. In his talk, Tygar demonstrated a number of methods that adversaries can use to corrupt machine learning, and he described an ongoing arms race as attackers seek to manipulate machine-learning systems.

“If I have an intrusion detection system that’s trying to detect malicious attacks, in the traditional approach, the bad guy would try to find some hole in that defense and burrow through that hole,” he said. “Attackers are getting smarter and what they’re doing is attacking during the training phase of machine learning. An attacker will slowly put in instances that will cause some type of misclassification of input data and cause an erroneous result. We’ve now seen this happen numerous times, particularly in the case of malware detectors.”

Tygar explained that such attacks are called “Byzantine” because they are carefully plotted, rather than random. “Adversaries can be patient in setting up their attacks, and they can adapt their behavior,” he said. “They can try to have attacks no longer detected as attacks, or make your defense system the ‘boy who cried wolf,’ with benign inputs classified as attacks. They can also launch a focused attack, e.g. spear-based, or they can search a classifier for blind spots.”

He explained that machine learning “classifiers” are trained on training data so that they respond appropriately when they later encounter live data. Hackers can exploit this process by “poisoning” the training inputs, Tygar explained. “Think of a bad older brother who has an infant baby sister and decides to teach his sister ‘special English,’ with lots of four-letter words, instead of the words she should be learning,” he said. “Pretty soon baby sister is talking like a sailor. That would be an example where a malicious actor was training some entity in a bad way.”
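
To make the poisoning idea concrete, here is a toy sketch in Python. It is our own illustration, not code from Tygar's talk or from his group's algorithms. It assumes a hypothetical one-dimensional detector that flags any input whose "suspiciousness" score exceeds the midpoint between the average benign and average malicious training scores; the function names and data are made up for the example. Symmetric, zero-mean noise in the training set leaves the threshold where it was, but a few attacker-chosen points mislabeled as "benign" drag it upward until a real attack slips through.

```python
from statistics import mean

# Toy, hypothetical detector: each input is reduced to a single "suspiciousness"
# score, and training data are (score, label) pairs with label 1 = malicious.

def train_threshold(samples):
    """Return the midpoint between the benign and malicious class means."""
    benign = [score for score, label in samples if label == 0]
    malicious = [score for score, label in samples if label == 1]
    return (mean(benign) + mean(malicious)) / 2

def is_flagged(threshold, score):
    """Flag an input as an attack if its score exceeds the learned threshold."""
    return score > threshold

def poison_until_evasion(clean, attack_score, poison_score=20.0, max_points=100):
    """Add benign-labeled outliers one at a time until attack_score evades detection.

    Returns the number of poisoned points needed, or None if max_points is not enough.
    """
    data = list(clean)
    for k in range(1, max_points + 1):
        data.append((poison_score, 0))  # attacker-chosen point, mislabeled as benign
        if not is_flagged(train_threshold(data), attack_score):
            return k
    return None

# Made-up training set: benign scores near 1, malicious scores near 9.
clean = [(0.0, 0), (1.0, 0), (2.0, 0), (8.0, 1), (9.0, 1), (10.0, 1)]

# Zero-mean "noise": errors on either side of each class that cancel out.
noisy = clean + [(-1.0, 0), (3.0, 0), (7.0, 1), (11.0, 1)]

if __name__ == "__main__":
    print(train_threshold(clean))                         # 5.0
    print(train_threshold(noisy))                         # 5.0
    print(poison_until_evasion(clean, attack_score=9.0))  # 3
```

In this toy setup, the clean and noisy training sets both yield a threshold of 5.0, so an attack scoring 9.0 is caught. Slipping in just three poisoned points raises the threshold above 9.0, and the same attack is no longer flagged. Real detectors are far more complex, but the sketch shows why an adversary who can shape the training data can also shape the decision boundary.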

“The search for adversarial machine learning algorithms is thrilling,” Tygar explained in his abstract for the talk. “It combines the best work in robust statistics, machine learning, and computer security. One significant tool security researchers use is the ability to look at attack scenarios from the adversary’s perspective (the black hat approach), and in that way, show the limits of computer security techniques. In the field of adversarial machine learning, this approach yields fundamental insights. Even though a growing number of adversarial machine learning algorithms are available, the black hat approach shows us that there are some theoretical limits to their effectiveness.”

Thanks to everyone who came to our three Spring Seminars—and please stay tuned to the CLTC website for information on our Fall 2018 Seminar Series!